For the past several years, artificial intelligence (AI) has left a clear mark on healthcare. For many hospitals, the watershed moment came when they adopted AI to accelerate their protocols seven-fold. From streamlining diagnostics to management algorithms, from point-of-care systems to mass-data information silos, and in every ward from oncology to orthopedics and from pathology labs to the ICU, AI is not only becoming commonplace; it may even come to supersede the traditional healthcare professional, that is, the human being.

In imaging, this is certainly becoming the case. At present, AI is helping with earlier detection, diagnosis, staging and segmentation, with findings available at the time of diagnosis rather than 3-4 weeks later. AI can perform clinical tasks that once needed the most expert of trained readers. The major limitation has been that AI was constrained to a single modality at a time (one of X-ray, MRI, PET, SPECT or ultrasound).

In the future, AI tools will be used to predict future events, be it therapeutic response, prognostication, adverse events or future metastasis. These predictions rely on features indistinguishable to the naked human eye, regardless of level of expertise. AI will eliminate the uncertainty of ‘Just where do we look?’. To do this well, it will need to use every modality we can feed into it.

Multimodal AI Biomarkers

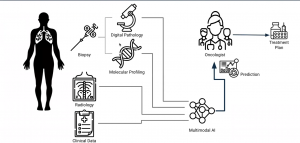

AI is now able to interpret biopsy, pathology and genetic readings, work with images from PACS/DICOM, feed its findings back to the radiologist, oncologist, pathologist and others, and store them in the RIS/HIS/EMR/oncology system. This allows it to determine the best course of action based not only on retrospective data and the latest clinical guidance (from NICE, EMA, FDA), but also on hospital-set protocols. This autonomy will free up the multidisciplinary team (MDT) to perform procedures or discuss other, more pressing matters (indeed, this would have been a godsend during the COVID era).

The challenges for AI in doing this today are that limited data is available and that this data is scattered throughout the hospital ecosystem. In addition, multidisciplinary teams sometimes lack the confidence to rely on AI and still feel it essential to hold long meetings to determine patient pathways. AI has become the junior doctor, fighting for its voice to be heard.

How can multimodal data enable predictive AI?

The problem with AI, many physicians argue, is that putting your trust in it carries the highest of stakes: a patient’s life. Predictions that guide therapeutic strategy can have a significant impact on patient outcomes. These predictions leverage properties that are inherently subvisual, so they cannot be checked by human readers in the same way that a normal diagnosis can. Trust and interpretability are crucial.

A strategy for this is to use multimodal correlation:

- Interpretation: provide a biological basis for imaging biomarkers through multi-scale, multimodal correlation with tumor biology.

- Use case: a radiology signature to guide targeted therapy in cancer care that is also predictive of genotype and histologic phenotype.

What about multi-scale complexity?

How will a tumor respond to treatment? Today we answer this question by weighing numerous factors together (ECOG, TNM, lymph node status, comorbidities, age, previous regimen, biomarkers, etc.). If the standard of care draws on a number of modalities to make treatment decisions, is it always feasible for AI to do this well from one modality alone? What if AI misses something?

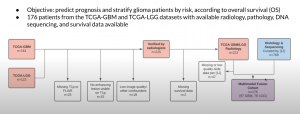

A strategy to address this is through multimodal fusion. That is, constructing a multimodal deep-learning framework for combining modalities according to their strengths for improved fused prediction. For example, orthogonalization-based deep fusion of radiology, digital pathology, sequencing, and clinical data for glioma prognostication.

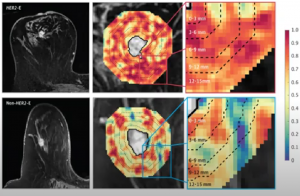

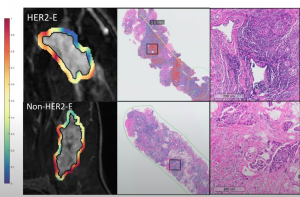

A use case can be seen in HER2-targeted therapies for breast cancer (trastuzumab, pertuzumab, neratinib, T-DM1, T-DXd). Over half of patients receiving expensive HER2-targeted therapies will not respond. Current molecular profiling can subcategorize HER2+ tumors into response-associated molecular subtypes (e.g. HER2-enriched); however, this is equally costly and invasive. This is where radiomics may be able to help.

Radiomics

Radiomics is the mining of radiology images through feature extraction and machine learning techniques to enable clinical prediction. Nathaniel Braman (‘Predicting cancer outcomes with radiomics and AI in radiology’, 2017) demonstrated that there is textural heterogeneity outside a tumor; however, this is a poorly understood predictor of treatment outcome. AI can assist with this.
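To make the idea of mining texture outside a tumor more concrete, here is a minimal sketch of extracting a few first-order features from a peritumoral ring. It assumes a 2D image and a binary tumor mask are already available; the function names (`dilate`, `peritumoral_ring`, `first_order_features`), the ring width and the synthetic data are all hypothetical and are not taken from the cited study, which may well use different features and software.

```python
import numpy as np

def dilate(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Grow a 2D boolean mask by one pixel per iteration (4-neighbourhood)."""
    out = mask.astype(bool)
    for _ in range(iterations):
        padded = np.pad(out, 1, constant_values=False)
        out = (
            padded[1:-1, 1:-1]   # the pixel itself
            | padded[:-2, 1:-1]  # neighbour above
            | padded[2:, 1:-1]   # neighbour below
            | padded[1:-1, :-2]  # neighbour to the left
            | padded[1:-1, 2:]   # neighbour to the right
        )
    return out

def peritumoral_ring(tumor_mask: np.ndarray, width: int = 3) -> np.ndarray:
    """Pixels within `width` steps of the tumor but outside the tumor itself."""
    tumor = tumor_mask.astype(bool)
    return dilate(tumor, width) & ~tumor

def first_order_features(image: np.ndarray, region: np.ndarray, bins: int = 16) -> dict:
    """Mean, standard deviation and histogram entropy of intensities in a region."""
    values = image[region]
    hist, _ = np.histogram(values, bins=bins)
    p = hist[hist > 0] / hist.sum()
    return {
        "mean": float(values.mean()),
        "std": float(values.std()),
        "entropy": float(-(p * np.log2(p)).sum()),
    }

# Synthetic stand-in for a scan: random intensities and a square "tumor".
rng = np.random.default_rng(0)
image = rng.normal(size=(32, 32))
tumor_mask = np.zeros((32, 32), dtype=bool)
tumor_mask[11:21, 11:21] = True

ring = peritumoral_ring(tumor_mask, width=3)
features = first_order_features(image, ring)
print(features)
```

In practice such features would be computed per patient and passed to a classifier; richer texture descriptors would normally replace the three simple statistics used here.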

AI radiology signatures can be trained to predict molecular subtypes of HER2+ tumors, such as HER2-enriched (HER2-E).

They can also be used to predict response to targeted therapy. In Braman’s study, 42 HER2+ patients were assessed, including HER2-E and other subtypes. The HER2-E signature directly predicted response in 2 external test cohorts (AUC = 0.79, n = 28; AUC = 0.69, n = 50).

AI was also used to predict the associated lymphocyte density/distribution (R = 0.57 within a subset of 27 patients with available biopsy samples).
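For readers less familiar with the AUC values quoted above, the following sketch shows one standard way to compute the area under the ROC curve: the fraction of responder/non-responder pairs in which the responder receives the higher score, with ties counted as half. The function name `roc_auc` and the toy labels and scores are made up for illustration and are not data from the study.

```python
import numpy as np

def roc_auc(labels, scores) -> float:
    """AUC as the probability that a positive case outscores a negative one."""
    labels = np.asarray(labels, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[labels], scores[~labels]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("need at least one positive and one negative case")
    # Compare every positive score with every negative score.
    diff = pos[:, None] - neg[None, :]
    wins = (diff > 0).sum() + 0.5 * (diff == 0).sum()
    return float(wins / (pos.size * neg.size))

# Hypothetical example: 1 = responder, 0 = non-responder.
labels = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.5, 0.3, 0.2]
auc = roc_auc(labels, scores)
print(round(auc, 3))  # 8 of 9 pairs are ranked correctly -> 0.889
```

An AUC of 0.5 corresponds to chance-level ranking and 1.0 to perfect separation, which gives some context for values such as 0.79 and 0.69 in small external cohorts.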

Multimodal fusion

AI models focused on radiology, pathology, molecular profiling, or clinical variables separately have shown promise for predicting patient outcomes. Clinical decision making, however, considers all of these data streams holistically. Combining them in a machine learning framework could boost performance by exploiting their relative strengths. Indeed, pivotal previous multimodal fusion work (Chen et al., Mobadersany et al.) showed a strong benefit when combining biopsy-based modalities, but did not include radiology.

The challenges here are lower data availability and inter-modality correlations, which can create redundancy and reduce the benefit of adding modalities.
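The redundancy problem can be illustrated with a simple penalty that grows when the embeddings of two modalities carry overlapping information. The sketch below uses the mean squared cross-correlation between standardized embeddings; this is a simplified stand-in chosen for illustration, not the loss function used in the cited work, and the names `standardize` and `cross_correlation_penalty` as well as the random "embeddings" are hypothetical.

```python
import numpy as np

def standardize(x: np.ndarray) -> np.ndarray:
    """Zero-mean, unit-variance scaling of each embedding dimension."""
    x = x - x.mean(axis=0, keepdims=True)
    return x / (x.std(axis=0, keepdims=True) + 1e-8)

def cross_correlation_penalty(embeddings) -> float:
    """Mean squared cross-correlation, averaged over all pairs of modalities.

    Each entry of `embeddings` is an array of shape (patients, features).
    Values near 0 suggest the modalities contribute independent information.
    """
    if len(embeddings) < 2:
        raise ValueError("need at least two modalities")
    z = [standardize(np.asarray(e, dtype=float)) for e in embeddings]
    n = z[0].shape[0]
    total, pairs = 0.0, 0
    for i in range(len(z)):
        for j in range(i + 1, len(z)):
            corr = z[i].T @ z[j] / n  # feature-by-feature correlations
            total += float(np.mean(corr ** 2))
            pairs += 1
    return total / pairs

# Hypothetical embeddings for 200 patients, 8 features per modality.
rng = np.random.default_rng(0)
radiology = rng.normal(size=(200, 8))
pathology_redundant = radiology + 0.1 * rng.normal(size=(200, 8))
pathology_independent = rng.normal(size=(200, 8))

redundant = cross_correlation_penalty([radiology, pathology_redundant])
independent = cross_correlation_penalty([radiology, pathology_independent])
print(redundant > independent)  # the redundant pair is penalized more
```

Adding a term of this kind to a training objective is one way to encourage each modality branch to learn something the others do not already provide.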

An example clinical dataset was assessed in breast cancer (Braman, Radiology and digital pathology, 2017). The patient selection flowchart can be seen below:

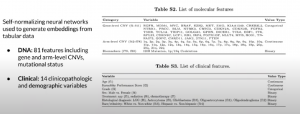

Orthogonal fusion of radiology, pathology, and DNA was also used, as were unimodal embeddings:

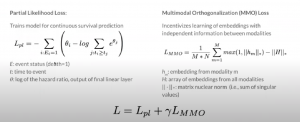

The assessment of loss functions was also important:

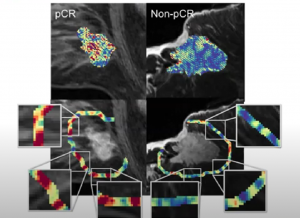

You can see below the sampling strategy and patient-level prediction in radiology and pathology:

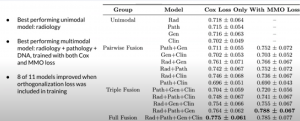

The results can be seen below:

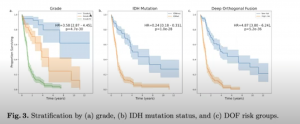

The results also included a comparison of risk stratification against clinical markers.

The results showed that a multimodal approach is needed to develop predictive AI imaging biomarkers that effectively leverage the full spectrum of oncologic data currently guiding care and that build clinical trust through an understood basis in multi-scale tumor biology.

Through multimodal correlation, the study developed a radiomic signature that could simultaneously identify the molecular subtypes of breast cancer, responsiveness to targeted therapy, and immune phenotype on histopathology.

Through multimodal fusion, they fused radiology, pathology, and molecular data into a predictive framework, which improved performance by incentivizing independent contributions from each modality.

Now that we know its clinical effectiveness, how can we advance multimodal biomarkers?

- More public multimodal datasets for benchmarking and development

- Better techniques for combatting modality missingness

- Better tools for unsupervised/supervised learning to make use of large pools of data without outcome labels

- Multidisciplinary collaboration, which is essential

AI is here to stay; we just need to trust in it, and let it do its job.

MDForLives is a global healthcare intelligence platform where real-world perspectives are transformed into validated insights. We bring together diverse healthcare experiences to discover, share, and shape the future of healthcare through data-backed understanding.