AI in Healthcare: Where Clinicians Trust It, and Where They Don’t

A clinician reviews an alert while juggling documentation, another notification, and a waiting patient.

The system suggests an action. The clinician pauses.

When AI conflicts with your clinical instinct, what do you do first?

AI is now embedded in routine care. The real question is whether trust holds when stakes rise. Clinician-reported data shows a clear pattern: AI is consulted often, but deferred to selectively. This article explains where trust holds, where it breaks, and why.

Note: This article is based on clinician-reported research conducted across the USA, UK, Canada, France, Germany, and Italy.

How much do clinicians trust AI in healthcare?

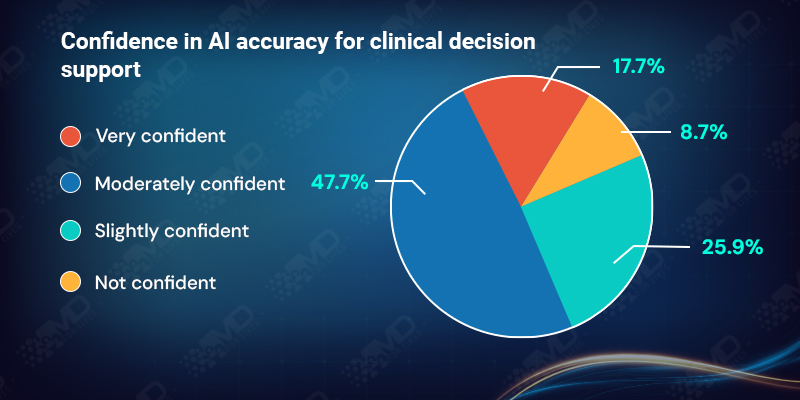

Clinician confidence in AI accuracy remains measured rather than assured.

Moderate confidence dominates in real-world practice. This matters because it reflects conditional dependence. Clinicians are willing to review AI outputs but hesitate to rely on them when decisions carry material clinical risk.

Confidence levels vary across countries, but the data does not support a simple “higher trust vs lower trust” narrative by region. Some smaller European samples show higher reported confidence.

This is not rejection. It is conditional reliance.

Why does clinical validation define trust in AI?

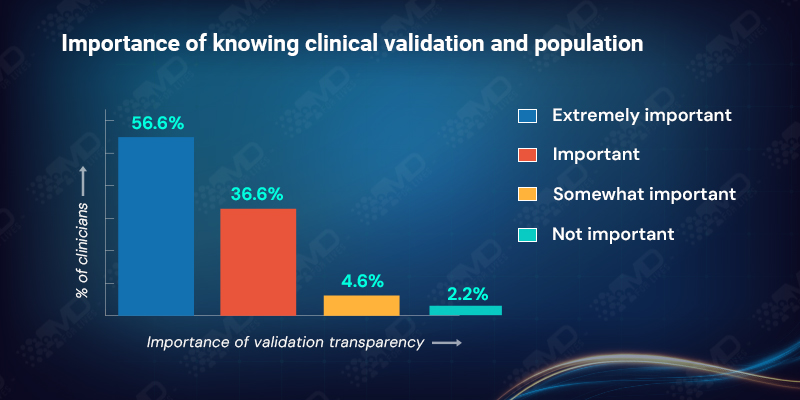

Validation transparency emerges as one of the strongest trust drivers in the dataset.

93.2% of clinicians say it is important or extremely important to understand how an AI system was clinically validated and on which patient populations.

For clinicians, validation is not abstract. When clinicians talk about “validation,” they are usually trying to answer a small set of practical questions before trusting an output in practice:

- Whether the model was externally validated

- Which populations and care settings were represented

- How subgroups performed

- Whether performance drift is monitored over time

Without clarity on population and setting, clinicians cannot judge transferability. Trust weakens when validation details are unclear, even if headline accuracy appears strong.

Transparency enables judgment; opacity forces caution.

“I use AI every day, but I don’t hand decisions over to it. If I can’t see how it was trained or why it’s making a recommendation, it stays a reference point, not a decision-maker.”

Dr. Maya Reynolds, Senior Consultant, USA

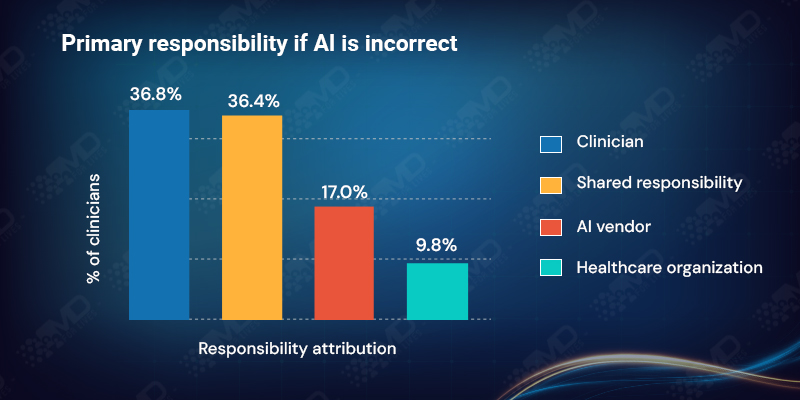

Who is accountable when AI fails in clinical practice?

Accountability reveals one of the most important tensions in AI adoption.

When asked who should be primarily responsible if an AI recommendation is incorrect, clinicians reported a fragmented view.

Responsibility is not being cleanly transferred. Clinicians still feel close to the risk, but accountability is distributed across humans, systems, and vendors.

This fragmentation directly affects trust. In clinical practice, authority and accountability are tightly linked. When responsibility is unclear, deference declines.

As long as accountability is fragmented and close to the clinician, deference will remain limited.

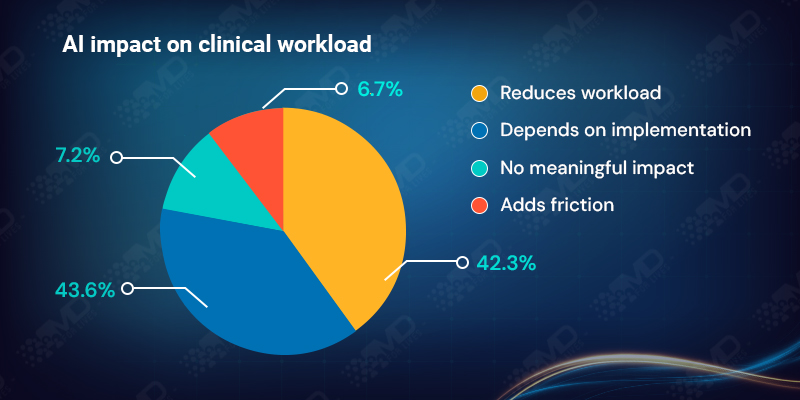

Does AI actually reduce clinical workload?

Clinicians report mixed experiences.

Efficiency gains are implementation-dependent, not automatic.

The “depends” category highlights that workload relief is driven less by the technology itself and more by how it is integrated into clinical workflows. In some settings AI streamlines tasks; in others it adds friction through extra clicks, duplicate documentation, or misaligned processes.

AI may save minutes, but it can cost attention.

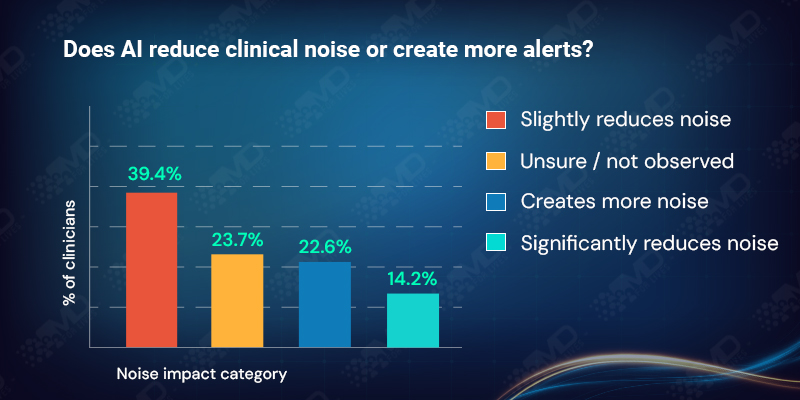

Is AI reducing noise or worsening alert fatigue?

Alert fatigue remains a visible concern.

Roughly one in four clinicians report added noise, and nearly one in four are unsure. That uncertainty may signal uneven exposure, limited or inconsistent deployment across settings, or tools running in the background without clinician visibility.

Among clinicians who report increased noise, higher mental effort is also more commonly reported, reinforcing the link between alert design and cognitive burden.

Trust improves when AI reduces noise, not adds volume.

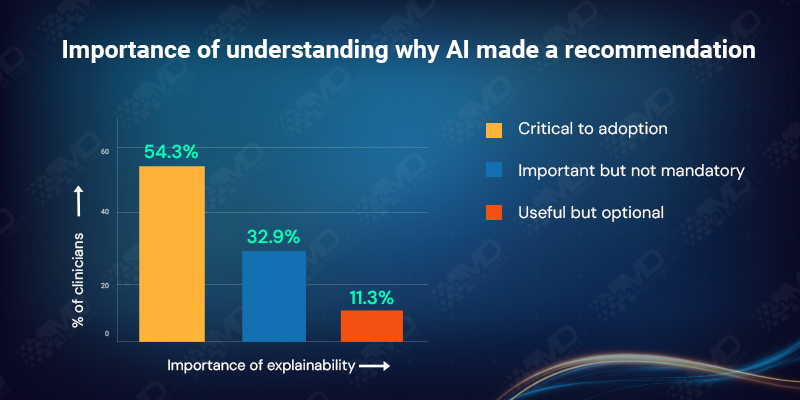

Why does explainability remain critical for AI in healthcare?

Explainability strongly shapes adoption.

87.2% of clinicians say understanding why an AI made a recommendation is critical or important.

This reflects the need for defensibility. Clinical decisions must be explained to colleagues, institutions, patients, and sometimes regulators. Accuracy alone is insufficient if reasoning cannot be articulated.

Clinicians often report preferring defensible logic over opaque accuracy, especially when clinical risk is non-trivial.

Trust follows reasoning, not just results.

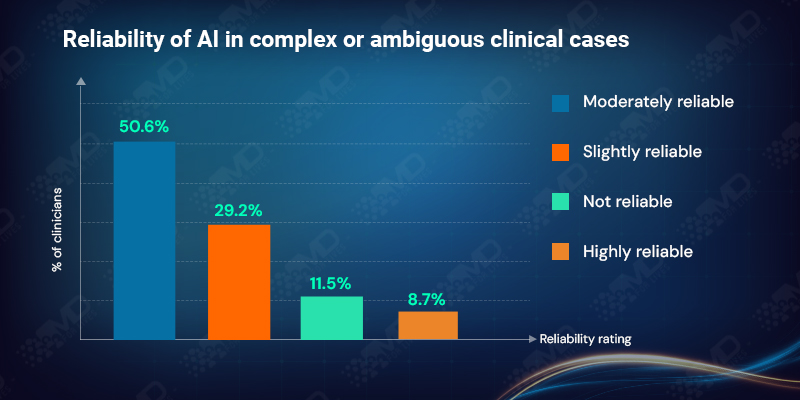

Can clinicians rely on AI in complex clinical cases?

Confidence drops sharply as case complexity increases. As ambiguity rises, the need for defensibility and responsibility rises with it.

This is where the trust gap is most visible. AI is strongest where ambiguity is limited and stakes feel lower. As uncertainty rises, clinicians retain final authority.

Despite frequent positioning of AI as a tool for complexity, clinicians across regions continue to reserve judgment in these cases.

AI informs complexity; clinicians own it.

“AI can save time on straightforward cases, but in complex ones it often means more checking, not less. I still have to think the case through as if the AI wasn’t there.”

Dr. Claire Bennett, Attending Physician, UK

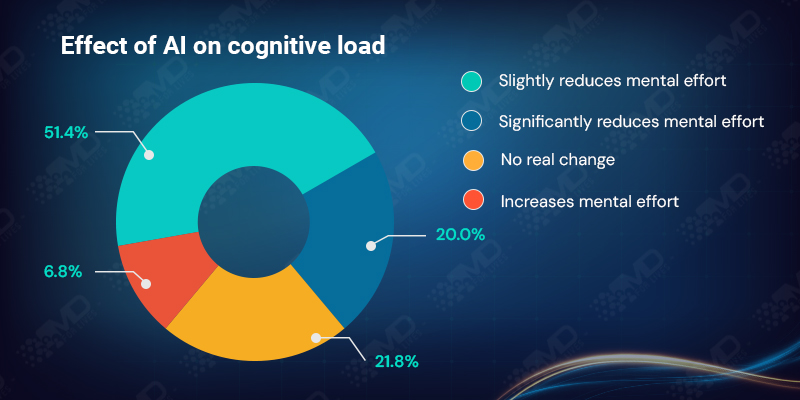

Does AI reduce cognitive burden for clinicians?

Not consistently.

28.6% of clinicians report no reduction or an increase in mental effort.

Cognitive burden appears in the form of extra checking, parallel reasoning, and second-guessing outputs. When AI adds cognitive steps instead of simplifying decision-making, adoption stalls.

Automation does not equal cognitive relief by default.

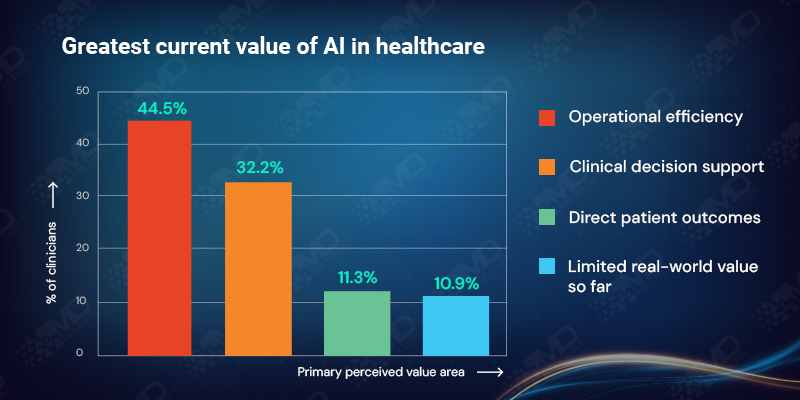

Where do clinicians see real value in AI today?

Perceived value remains primarily indirect.

Clinicians largely view AI as an infrastructure enhancer rather than a direct driver of outcomes. Benefits are mediated through workflow, adherence, and implementation quality. This does not mean outcomes fail to improve; rather, outcomes are not yet where clinicians most clearly feel the impact.

Value is workflow-first, not outcome-first.

What do clinician insights reveal about the future of AI in healthcare?

Across sections, a consistent picture emerges:

- Use is increasing

- Trust remains conditional

- Accountability stays human

- Workflow fit determines adoption

- Cognitive burden limits scale

AI is embedded in practice, but trust has not advanced at the same pace. Clinicians are not resisting AI; they are defining the conditions under which it can matter.

Closing Perspective

AI is present in healthcare.

Clinical trust is still catching up.

If AI is everywhere but rarely trusted at the edge cases, what kind of tool is it becoming in practice?

Until validation is transparent, accountability is clearer, and cognitive load is reliably reduced, AI will continue to inform decisions without leading them.

Hope this insight added value.


About the Author: MDForLives

MDForLives is a global healthcare intelligence platform where real-world perspectives are transformed into validated insights. We bring together diverse healthcare experiences to discover, share, and shape the future of healthcare through data-backed understanding.